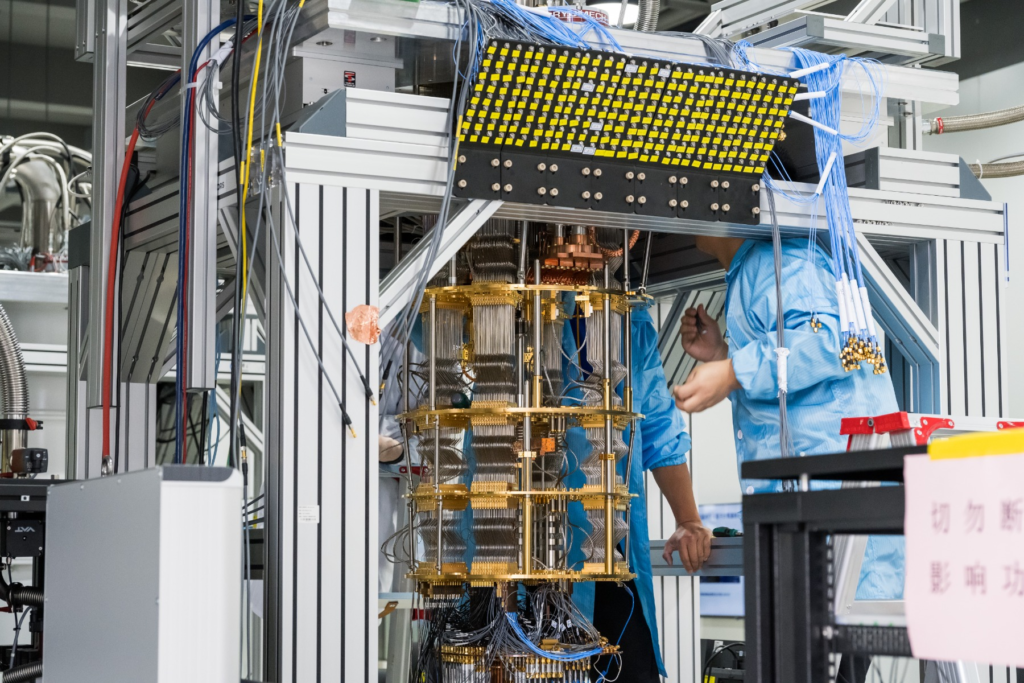

Researchers at the Anhui Quantum Computing Engineering Research Center have completed the world’s first fine-tuning of a billion-parameter AI model on a superconducting quantum computer. The experiment ran on Origin Wukong, China’s third-generation superconducting quantum system, which is built around a domestically developed 72-qubit chip. The quantum processor ran large numbers of quantum tasks in parallel to accelerate fine-tuning, and the tuned model performed better on both psychological-dialogue and mathematical-reasoning benchmarks.

The study found that even with 76% fewer parameters, training effectiveness improved by 8.4%, and accuracy on a math reasoning task rose from 68% to 82%. The results suggest that quantum resources can complement classical LLMs in parameter-efficient fine-tuning. The chip’s support for high-throughput quantum task execution was central to these gains: according to Origin Quantum, the system generated hundreds of parallel quantum tasks per batch and used hybrid quantum-classical processing to optimize the output.

This is the first reported use of a superconducting quantum computer to fine-tune a real-world billion-parameter language model, pointing to new potential for quantum-accelerated AI training. Since its launch in January 2024, Origin Wukong has completed more than 350,000 tasks in fields including fluid dynamics, finance, and biomedicine, with users in 139 countries accessing the system remotely.


April 7, 2025