Researchers from Google Quantum AI, the California Institute of Technology, and Purdue University have published a paper introducing families of generative quantum models. The research, available on arXiv, establishes that both learning and sampling can be performed efficiently in the beyond-classical regime, opening new possibilities for quantum-enhanced generative models with provable advantage.

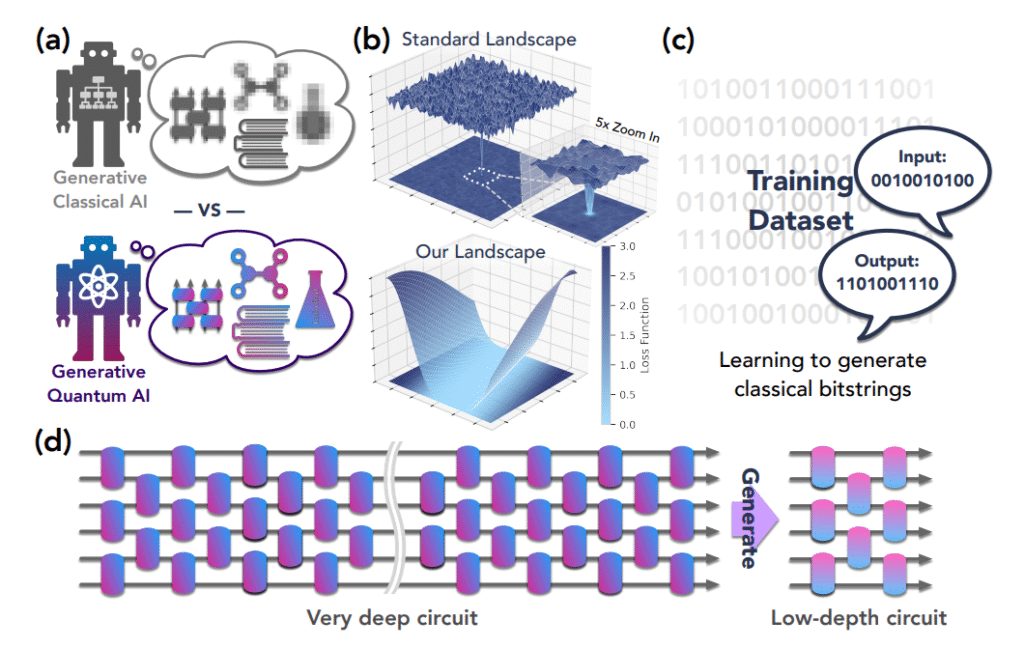

The models are designed to be efficiently trainable while remaining difficult to simulate classically: the framework avoids barren plateaus and local minima in the training landscapes. Using a 68-qubit superconducting quantum processor, the team demonstrated these capabilities in two scenarios: learning classically intractable probability distributions and learning quantum circuits for accelerated physical simulation. The work also introduces an exact mapping between families of deep and shallow circuits, as well as a “sewing technique,” a divide-and-conquer learning algorithm that simplifies the learning landscape.

The research addresses a key challenge in quantum machine learning: showing that learning and sampling can both be performed efficiently in the beyond-classical regime. The authors also show that efficiently identifying a constant-depth circuit representation of a general circuit requires a quantum computer. Together, these results provide a foundation for developing quantum-enhanced generative models with provable advantage.


September 15, 2025