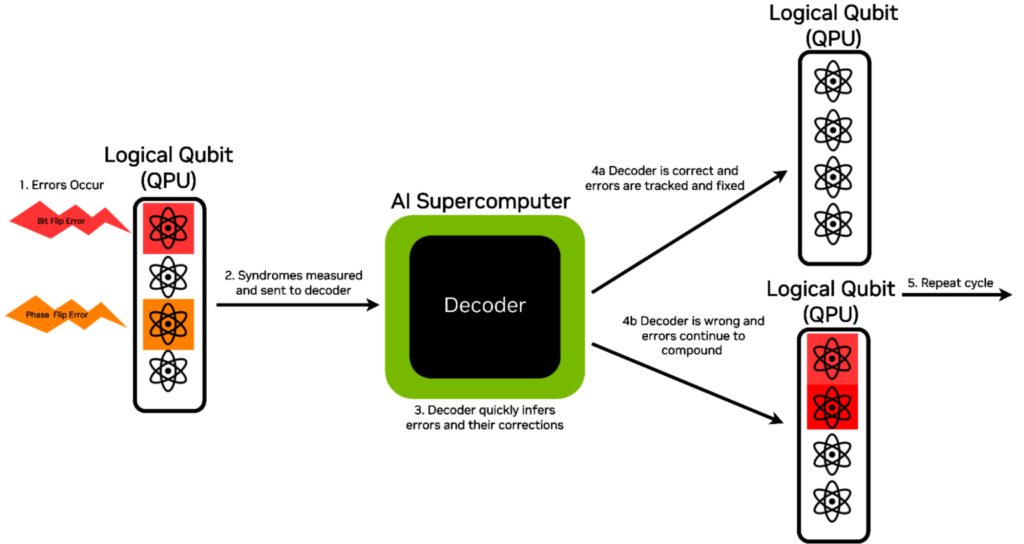

NVIDIA and QuEra have developed a transformer-based AI decoder that speeds up and scales the decoding step of quantum error correction (QEC). Built with the NVIDIA CUDA-Q platform, the decoder outperforms state-of-the-art most-likely error (MLE) decoders in both accuracy and scalability. Benchmarks on data from QuEra’s neutral-atom quantum processing unit (QPU) showed that the AI decoder processes error syndromes with higher accuracy and lower latency, improving fault tolerance. This makes it an important step toward practical, large-scale quantum computing.

The NVIDIA AI decoder targets a key bottleneck in QEC: error syndromes measured across many physical qubits must be processed in real time to infer the correct correction operations. Traditional decoders become exponentially slower as the qubit count grows. The transformer-based decoder instead uses graph neural networks (GNNs) and attention mechanisms to model the dependencies between syndromes and logical qubits efficiently. This structure lets the decoder learn from synthetically generated syndrome data, reducing reliance on costly experimental QPU runs. Initial results on QuEra’s distance-3 magic state distillation (MSD) circuit show that the NVIDIA AI decoder outperformed the MLE decoder, producing higher-fidelity magic states at lower latency.
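To make the attention idea concrete, here is a minimal sketch of single-head scaled dot-product self-attention over a vector of syndrome bits, in plain Python. It is not NVIDIA’s architecture and omits the GNN component entirely. The embedding (each syndrome bit mapped to a small vector that also encodes its position), the fixed projection matrices, and the names `embed_syndrome` and `self_attention` are hypothetical, chosen only to show how every syndrome position can draw on information from every other position.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]

def embed_syndrome(syndrome):
    """Hypothetical 3-dim embedding per bit: [bit value, bias, position in [0, 1]]."""
    n = len(syndrome)
    return [[float(bit), 1.0, i / max(n - 1, 1)] for i, bit in enumerate(syndrome)]

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    Returns (outputs, weights), where weights[i][j] is how strongly
    token i attends to token j.
    """
    queries = [matvec(w_q, t) for t in tokens]
    keys = [matvec(w_k, t) for t in tokens]
    values = [matvec(w_v, t) for t in tokens]
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        attn = softmax(scores)
        weights.append(attn)
        # Each output is the attention-weighted average of all value vectors.
        outputs.append([sum(a * v[c] for a, v in zip(attn, values))
                        for c in range(len(values[0]))])
    return outputs, weights

# Hypothetical fixed projections; a trained decoder would learn these from data.
W_Q = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.0]]
W_K = [[1.0, 0.0, -0.5], [0.0, 1.0, 0.0]]
W_V = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]

syndrome = [0, 1, 1, 0, 0, 1]  # example 6-bit syndrome (e.g. 3 X-type + 3 Z-type checks)
tokens = embed_syndrome(syndrome)
outputs, weights = self_attention(tokens, W_Q, W_K, W_V)
for i, row in enumerate(weights):
    print(i, [round(w, 3) for w in row])
```

In a real decoder the projections would be learned and stacked across many heads and layers, with a final layer predicting the logical correction. The point of the sketch is only that each output row mixes information from all syndrome positions, which is what allows attention to capture correlations between checks.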

Magic state distillation (MSD) is a core component of fault-tolerant quantum computing, used to prepare the high-fidelity magic states required for universal quantum computation. QuEra’s experiment encoded five logical magic states in 35 neutral-atom qubits (seven per block), then distilled them into a single higher-fidelity magic state using a 5-to-1 MSD protocol with the [[7,1,3]] color code. The key innovation was correlated decoding, in which error syndromes are analyzed jointly across all logical qubits rather than block by block. This significantly improves decoder accuracy because it captures the correlated errors that logical two-qubit gates introduce. However, MLE-based decoders do not scale beyond small code distances, which motivates alternatives such as NVIDIA’s AI decoder.
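The [[7,1,3]] color code is equivalent to the Steane code, whose X-type and Z-type stabilizers each follow the parity-check matrix of the classical [7,4,3] Hamming code. The plain-Python sketch below shows the syndrome-to-correction step for one block in its simplest form: a single bit flip on qubit j produces a 3-bit syndrome equal to the binary representation of j, so a lookup recovers the faulty qubit. It decodes each block independently and assumes at most one error, so it is not the correlated MLE decoder used in the experiment; the function names are hypothetical.

```python
# Parity-check matrix of the [7,4,3] Hamming code: column j (1-indexed) is the
# binary representation of j. The Steane / [[7,1,3]] color code uses this
# matrix for both its X-type and Z-type stabilizers.
H = [[(j >> bit) & 1 for j in range(1, 8)] for bit in range(3)]

def syndrome(error):
    """3-bit syndrome (least significant bit first) of a 7-bit error pattern."""
    return [sum(h * e for h, e in zip(row, error)) % 2 for row in H]

def decode_single_error(syn):
    """Return a 7-bit correction, assuming at most one bit flip occurred."""
    position = sum(bit << i for i, bit in enumerate(syn))  # 0 means "no error"
    correction = [0] * 7
    if position:
        correction[position - 1] = 1
    return correction

# Every single-qubit bit flip is identified and corrected exactly.
for q in range(7):
    err = [1 if i == q else 0 for i in range(7)]
    corr = decode_single_error(syndrome(err))
    residual = [(e + c) % 2 for e, c in zip(err, corr)]
    assert residual == [0] * 7
print("all single-qubit errors corrected")
```

With two flips, say on qubits 1 and 2, the syndrome points to qubit 3, and the "correction" completes a weight-3 logical operator instead of removing the error. This is why a distance-3 code corrects only one error per block, and why errors that two-qubit gates spread across blocks are better handled by decoding the blocks jointly.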

Practical fault-tolerant quantum computing requires higher-distance QEC codes to push logical error rates down. MLE decoding times, however, exceed practical limits beyond distance 3, making MLE decoders infeasible at distance 5 and above. NVIDIA and QuEra aim to scale the AI decoder using AI supercomputing resources, in particular the NVIDIA Accelerated Quantum Research Center (NVAQC) and the NVIDIA Eos supercomputer, which can generate up to 500 trillion shots of data per hour. The NVIDIA team has also developed new sampling algorithms that generate synthetic data a million times faster than previous methods. Training the distance-3 AI decoder took one hour on 42 H100 GPUs, while larger code distances will demand the latest NVIDIA Blackwell GPUs and highly parallelized AI architectures.
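Some back-of-the-envelope arithmetic shows why larger distances strain exact decoders and why data throughput matters. The sketch assumes the triangular 6.6.6 color-code family, which contains the [[7,1,3]] code at d = 3 and uses n = (3d² + 1)/4 physical qubits per distance-d block. For five blocks decoded jointly it counts the 2^(5n) possible bit-flip patterns that a naive exhaustive search would face. Practical MLE decoders do not enumerate patterns this way, so the count is only a rough indicator of how fast the search space grows. The last lines convert the quoted figure of 500 trillion shots per hour into shots per second.

```python
def color_code_qubits(d):
    """Physical qubits in a distance-d triangular (6.6.6) color-code block, d odd."""
    assert d % 2 == 1 and d >= 3
    return (3 * d * d + 1) // 4

BLOCKS = 5  # five logical inputs to the 5-to-1 distillation circuit

for d in (3, 5, 7):
    n = color_code_qubits(d)
    total = BLOCKS * n
    # Naive search space: every bit-flip pattern on all qubits, decoded jointly.
    patterns = 2 ** total
    print(f"d={d}: {n} qubits per block, {total} in total, "
          f"2^{total} ~ {patterns:.2e} bit-flip patterns")

SHOTS_PER_HOUR = 500e12  # quoted upper bound for synthetic data generation
shots_per_second = SHOTS_PER_HOUR / 3600
print(f"~{shots_per_second:.2e} shots per second")
```

At d = 3 the five blocks give 35 qubits, matching the experiment; at d = 5 the joint search space already grows to 2^95 patterns, far beyond anything that can be enumerated.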

This AI-powered decoder represents a major step toward scalable fault-tolerant quantum computing, addressing key computational bottlenecks in quantum error correction and logical qubit encoding. NVIDIA and QuEra’s continued advances in AI-driven QEC, large-scale simulation, and parallelized decoder architectures will be crucial for unlocking practical quantum applications at the scale of millions of qubits.

For more details, see the original NVIDIA announcement.

March 21, 2025