By Carolyn Mathas

Mark Twain is said to have remarked that “History doesn’t repeat itself, but it often rhymes,” and we may observe such rhymes when comparing the advent of classical computing with today’s evolving quantum computing. To consider whether quantum computing might mirror the past 70 years of classical computing history, it’s important to look at the underlying enabling technologies, the development of an entrepreneurial culture, and, ultimately, how the masses gained access to the technology.

This comparison will begin in the fertile orchards of Santa Clara County, California, and end in the cloud.

Transistors to Microprocessors

From the 1930s, vacuum tubes ran electronics, including the warehouse-size ENIAC, the first general-purpose electronic computer, unveiled shortly after WWII. Instrumental in our history is William Shockley of Bell Telephone Laboratories, who in 1947 was one of the inventors of the transistor. Thanks to its small size and low power requirements, the transistor enabled greater performance and could be used widely. By 1955, Shockley had left Bell Labs, moved to the Santa Clara Valley, and founded Shockley Semiconductor, hiring such eventual stars as Gordon Moore, Jay Last, and Bob Noyce. Their goal was to create a commercially viable silicon transistor to replace germanium, which would fail at high temperatures.

Unfortunately, Shockley’s management style and focus did not match those of his team, and eight of his stars defected. Such defections would become a recurring pattern in the valley for decades. Because corporate culture until then dictated that one joined a company and retired from it decades later, the eight were branded traitors.

A perfect incubator environment was developing in the valley. Stanford was leasing land to companies, defense spending was huge because of the Soviet threat, and when the Soviet Union launched Sputnik in 1957, it blindsided U.S. space efforts. NASA was created in 1958 in response, with a $400 million budget, which became a huge opportunity for the newly formed Fairchild Semiconductor, the company the eight defectors had founded.

In March 1961, Fairchild introduced its first commercial integrated circuit, and the microchip went on to change the electronics landscape. Fairchild won the contract to supply chips for NASA’s Apollo Guidance Computer. Yet by the late 1960s, all the members of the founding team, plus many researchers and engineers, had left Fairchild to start new ventures. Robert Noyce, Gordon Moore, and Andy Grove left Fairchild in 1968 to start Intel, where “Ted” Hoff conceived the first microprocessor, the Intel 4004, released in November 1971. This single chip could be programmed for a specific application, and computing power that once required a machine the size of a refrigerator could now fit on a fingertip.

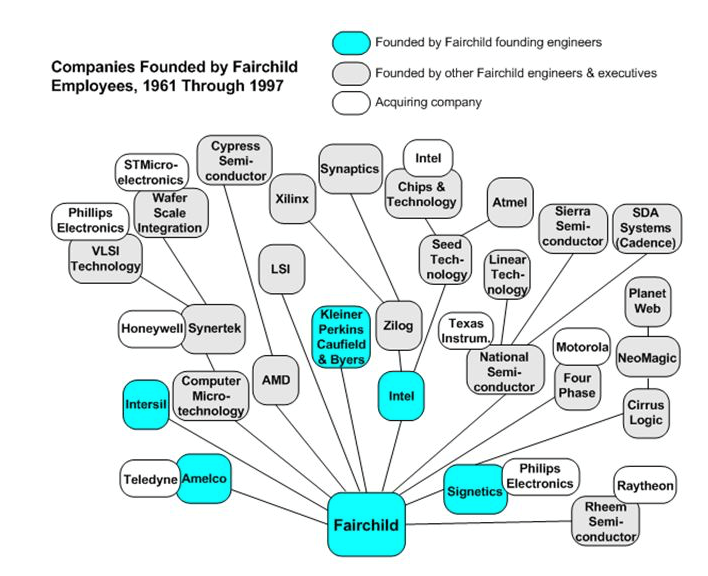

Fairchild Semiconductor spawned so many companies that they were dubbed “Fairchildren.” According to a report by analyst firm Endeavor Insight, of the more than 130 San Francisco Bay Area tech companies trading on the NASDAQ or the NYSE in 2014, “70 percent of these firms can be traced directly back to the founders and employees of Fairchild. The 92 public companies that can be traced back to Fairchild are now worth about $2.1 trillion.”[1]

By some estimates, 90% of startups across all categories fail, and Silicon Valley has had its share. However, several companies that took root in the soil of bygone orchards have achieved impressive success.

The PC Blueprint

Another technology success, and a game-changer, was the IBM personal computer. IBM introduced its PC in 1981 based on an “open architecture” that enabled developers to freely add hardware and software. Because IBM built the machine around an Intel microprocessor and a Microsoft operating system, the PC was relatively easy to reverse engineer. Compaq was formed soon thereafter and quickly became one of the leading producers of IBM compatibles, shipping 53,000 portable PCs and raking in $111 million in its first full year of production.

Quantum Computing

Let’s see how much rhyme or repetition, and how much difference, there is between quantum computing and these earlier examples. We will look at three criteria that we think are important: the underlying enabling technologies, the entrepreneurial culture, and the question of access.

Enabling Technologies

One important difference we should point out right off the bat is that today’s researchers and quantum technologists cut their proverbial teeth on classical computing technology. This gives them a large base of technology and experience to lean on. Noyce et al. grew up in a world without computers or solid-state electronics, which makes their successes even more mind-boggling.

According to Bob Sutor, Chief Quantum Exponent at IBM, “So here we are with 70+ years of experience with classical computing and there is no reason to repeat another 70 years to do this with quantum computing. We’ve seen this movie before. So, what did we learn the first time around that we can apply this time?”

One lesson is that many flavors of hardware can serve the same goal, which was true of classical computers and is true of quantum ones as well. “From a hardware perspective, the actual qubits themselves, as in the case of IBM, can be a superconducting device, or an ion trap device, a photonic device. They are surrounded by a lot of classical computing,” said Sutor.

“From that perspective, anything that we can use to control the quantum computer is classical computing. You can’t say that quantum computing is what classical was in the 1950s. Quantum computing is quantum plus classical. There is only a small amount that is quantum. We’ve taken the learning experience and the technology of classical computing with which to surround our purely quantum parts,” he said.[2]

For example, when implementing an algorithm, a high percentage of the code is classical and only a small percentage is quantum. Quantum and classical computing are therefore not a pure contrast of this versus that; what matters is their integration. This kind of blending of technologies was not part of the picture when classical computing was developing.
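To make the hybrid idea concrete, here is a minimal Python sketch of a variational loop, one common pattern in which a classical optimizer repeatedly calls a small quantum subroutine. It assumes a single qubit prepared in |0⟩, rotated by an angle θ with an RY gate and measured in Z, whose expected value is cos θ. The function names are hypothetical, and the “quantum” call is simulated classically rather than sent to real hardware or to any vendor’s SDK.

```python
import math


# Hypothetical stand-in for the quantum part. In a real hybrid workflow this call
# would run a parameterized circuit on a quantum processor (or simulator) and return
# a measured expectation value. Here it is computed exactly: preparing |0>, applying
# RY(theta), and measuring Z gives <Z> = cos(theta).
def quantum_expectation(theta: float) -> float:
    return math.cos(theta)


def parameter_shift_gradient(theta: float) -> float:
    # Parameter-shift rule for an RY rotation: the exact gradient comes from two
    # extra evaluations of the quantum subroutine at theta +/- pi/2.
    shift = math.pi / 2
    return (quantum_expectation(theta + shift) - quantum_expectation(theta - shift)) / 2


def hybrid_minimize(theta: float = 0.1, learning_rate: float = 0.4,
                    steps: int = 100) -> tuple[float, float]:
    # Classical outer loop: plain gradient descent on the single circuit parameter.
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)


if __name__ == "__main__":
    best_theta, best_value = hybrid_minimize()
    # The minimum of cos(theta) is -1, reached at theta = pi.
    print(f"theta = {best_theta:.4f}, <Z> = {best_value:.4f}")
```

Nearly every line here is classical control logic; only `quantum_expectation` would touch a quantum processor, which mirrors the small quantum share of the code described above. A production workflow would use a richer circuit, a noisy sampled expectation value, and a more robust optimizer.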

Another example is in the software. Python serves as the base for many of the programming frameworks used to program quantum computers, and Python has been around for about 30 years. The industry didn’t come up with a brand-new high-level programming language to program quantum systems; the goal is to leverage what exists to make writing programs for quantum computers as simple as possible. In classical computing, by contrast, early high-level languages such as Fortran and COBOL had to be developed largely from scratch, with little prior work to build on.

Culture

Quantum technology is developing in a different time and place, but it is surrounded by the fruits of its classical predecessors. Still largely in its infancy, quantum tech will likely repeat many of the stages of innovation and entrepreneurship. The phrase “Silicon Valley” was synonymous with openness and risk-taking, and we still see that spirit today in quantum computing.

Early Silicon Valley was the first place where it became acceptable not to stay with one company for 30 years until retirement. As a result, it became common for startups to launch and fail and for their founders to move on to a new venture. A failure no longer ruined a career.

One difference we see is in the speed of communication. If a research group makes a breakthrough, it can post a paper on arXiv and then tweet about it so that the world knows within a few days. When the semiconductor industry was in its infancy, this type of news took weeks or months to get around unless you were located in Silicon Valley. One reason for the concentration of semiconductor firms there was that engineers and researchers would get together socially at local bars and trade the latest gossip. Exchanging news still happens as much as ever, but one no longer needs to go to the local bar to do it. Quantum researchers can now use Zoom, Telegram, or Clubhouse to discuss the latest rumors, so being located within a geographic technology cluster matters less.

Access

A big difference between the early classical computing industry and quantum computing is how you access it. Most classical computers were installed on-premises, whether they were mainframes or personal computers. A few dedicated timesharing services such as CompuServe existed, but they faded with the rise of the personal computer and the internet. Access to quantum computing has been very different. Cloud computing has taken off over the past 20 years, and it has been the de facto way to use a quantum machine from the very beginning. Some have talked about shrinking the size and maintenance requirements of quantum computers so that individual organizations could install them on-premises in the future. But the number of such installations is unlikely to come anywhere near the hundreds of millions of units we saw with laptop computers. Instead, we expect hundreds of millions of applications to access quantum computers across the cloud.

The Crystal Ball

Classical computing spawned a huge social media industry that seemed to come out of nowhere.

What is the quantum equivalent? Whatever it turns out to be, it will likely be more of an AI question than a quantum one. In reality, users will likely draw on quantum technology to answer their most difficult risk-based questions behind the scenes, without even knowing it, rather than interacting with it in real time as they do with social media today.

For developers, however, it will be an exciting ride, akin to that of early classical computing. History likely won’t repeat itself verbatim this time, but watch for the rhymes.

References

1. TechCrunch, “The First Trillion-Dollar Startup,” July 26, 2014.

2. Private conversation with Bob Sutor.

November 17, 2021