by Doug Finke and David Shaw

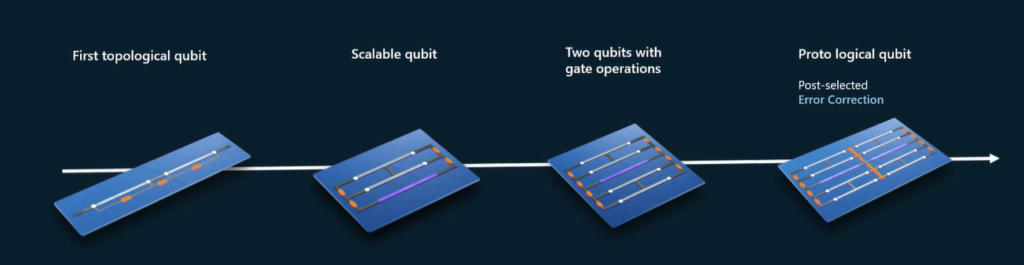

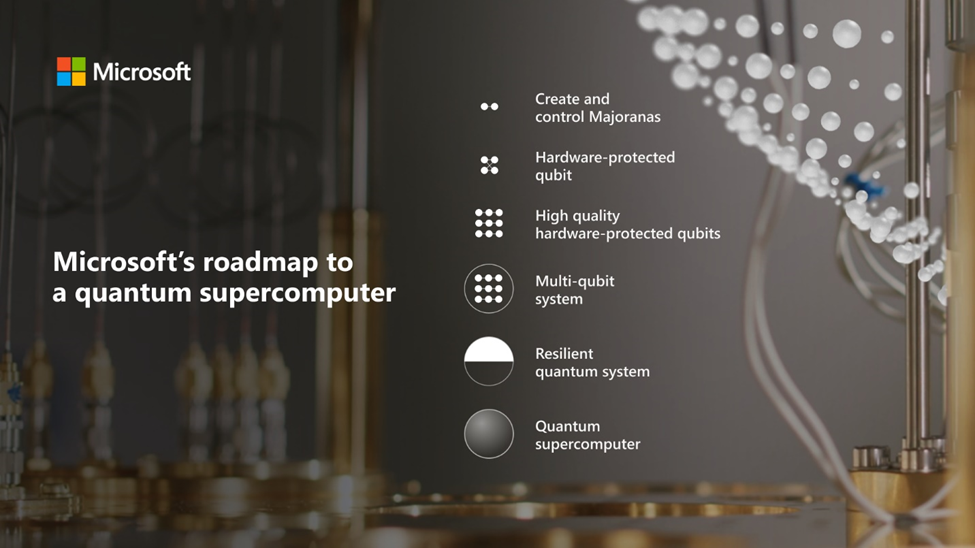

Last June, Microsoft announced a roadmap to a quantum supercomputer that consisted of six stages as shown below:

They indicated that they had completed the first stage, Create and control Majoranas, and had provided technical data in a paper published in Physical Review B titled "InAs-Al hybrid devices passing the topological gap protocol". We wanted to better understand the subsequent steps in this roadmap and talked to Dr. Chetan Nayak, Technical Fellow at Microsoft, who explained it to us in more detail.

Microsoft’s presentation of topological qubits is now less theoretical and much more pragmatic. The resulting roadmap promises a more modest machine than some of the earlier hype around topological qubits may have suggested. GQI thinks it seems more realizable and interesting as a result.

The first thing to understand is that topological qubits do not eliminate errors entirely. Rather, Microsoft believes they will be able to exhibit error rates that are perhaps an order of magnitude or more smaller than the error rates inherent in other quantum modalities. Microsoft’s current near-term goal is to demonstrate qubits that have error rates of approximately 10⁻⁴. In comparison, the best superconducting or ion trap systems are currently somewhere between 10⁻² and 10⁻³, typically with roadmap plans to improve to 10⁻⁴.

Microsoft is thus targeting error rates not so different from those being targeted by other FTQC roadmaps. However, at a time when many other programs seem stalled in their attempts to practically achieve these improvements, GQI welcomes a new practical approach and avenue of attack on this sectoral challenge.

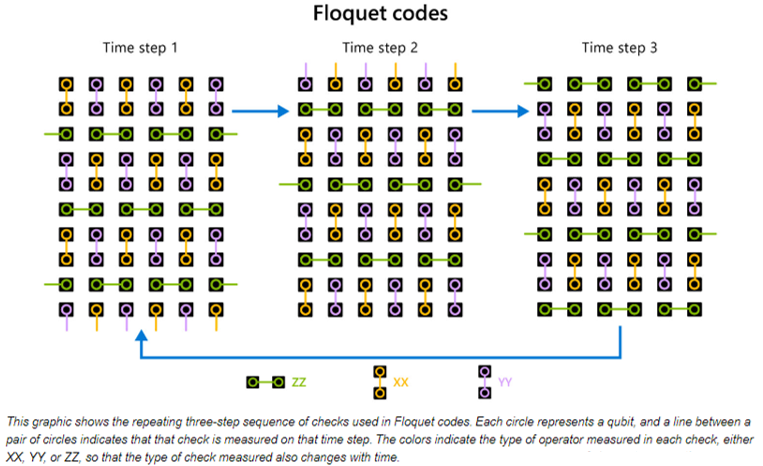

They will couple this low physical error rate with a Floquet error correction code to hit a near-term goal of 10⁻⁶ for a logical error rate. At that point, they believe they will start being able to use a topological quantum computer for quantum advantage in some applications. Longer term, they hope to continue to improve the technology to achieve a logical error rate of 10⁻¹² or better so they can run very complex quantum algorithms such as Shor’s algorithm. Microsoft foresees that some of these very complex algorithms may run on a machine for a month or more. Although that would be expensive, if it produces the formula for a blockbuster new drug, it would be worth it.

A simultaneous goal is to make a device which is small, fast, and controllable. They are targeting physical clock speeds in the tens of megahertz, which is comparable to the speeds of some of the fastest superconducting machines and much faster than those of current ion trap machines. Microsoft wants to avoid a technology that will require networking hundreds of machines together in a large data center in order to create a platform that will support hundreds of thousands of physical qubits.

A key parameter that they want to improve is called the topological gap. In their research paper, they fabricated a device using a heterostructure of indium arsenide (InAs) and aluminum (Al) and measured a topological gap of 20-60 micro electron-volts (µeV). This level is sufficient to demonstrate that the superconducting nanowires have Majorana zero modes at their ends and that they can reliably produce a topological phase of matter.

It turns out there is an inverse exponential relationship between the error rate and the topological gap: the error rate falls exponentially as the gap increases. So, the next phase of Microsoft’s research is to look at different materials and design variations in order to achieve a higher topological gap and a lower error rate.
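The article does not give the functional form of this relationship. One common way to picture it is thermal suppression, where the error rate scales roughly as exp(−Δ / k_B T) for a gap Δ at temperature T. The Python sketch below assumes that form and an illustrative operating temperature of 50 mK; the function name, the temperature, and the choice of model are assumptions for illustration, not values from Microsoft's paper, and real devices have other error sources.

```python
import math

# Boltzmann constant expressed in micro-electron-volts per kelvin.
K_B_UEV_PER_K = 86.17333262


def thermal_suppression(gap_uev: float, temperature_k: float) -> float:
    """Return exp(-gap / (k_B * T)).

    This is an assumed, simplified model of how an error rate might
    shrink as the topological gap grows; it is not Microsoft's formula.
    """
    if temperature_k <= 0:
        raise ValueError("temperature must be positive")
    return math.exp(-gap_uev / (K_B_UEV_PER_K * temperature_k))


if __name__ == "__main__":
    assumed_temperature_k = 0.05  # 50 mK, an illustrative assumption
    # 20 and 60 µeV bracket the measured range quoted in the article.
    for gap_uev in (20, 40, 60):
        factor = thermal_suppression(gap_uev, assumed_temperature_k)
        print(f"gap = {gap_uev} µeV -> suppression factor {factor:.2e}")
```

Under this assumed model, each fixed increase in the gap multiplies the suppression factor by the same constant, which is why even a modest improvement in the gap can be worth pursuing.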

GQI views the achievement of a realistic topological gap as a key differentiator between Microsoft’s hardware platform and those that have merely demonstrated non-Abelian anyons in software systems. It’s this gap that provides protection against interaction with the environment and leakage of the qubit outside of the computational space. From a supply chain perspective, it’s interesting to see another leading quantum platform leveraging III-V semiconductors. Whether Microsoft can move beyond this level of performance is a key watch point.

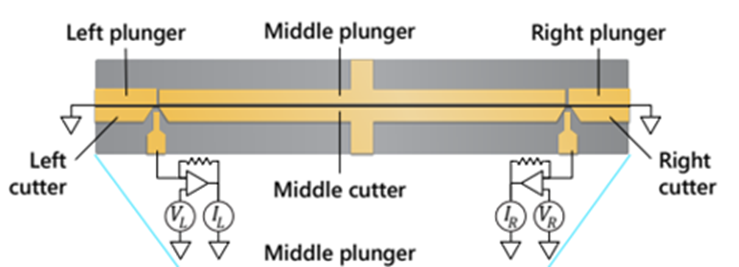

Qubits are composed of a pair of Majoranas, e.g., two separate nanowires. Currently, the pairs are arranged in a collinear fashion. Microsoft is investigating a parallel nanowire arrangement and, later, more complicated arrangements in what they call an H-Structure. The qubit state is encoded by the parity of the two Majoranas (either Even-Even or Odd-Odd), and this is sensed by a quantum dot, which is depicted as the orange dot in the accompanying picture.

So, after completing these developments, Microsoft will have reached their third milestone of creating a High-Quality Hardware-Protected Qubit. High quality means that they have achieved their goal of a 10⁻⁴ error rate on the qubit.

The fourth milestone of creating a multi-qubit system will bring together roughly 4 or 8 qubits to demonstrate a small system. This will include control electronics that will control the operations of the qubits.

The fifth milestone will scale the number of qubits to create a demo of a logical error-corrected qubit. The Microsoft architecture is based on a technique called Measurement-Only Topological Quantum Computing. This has one important difference from other measurement-based quantum computing architectures: it doesn’t need to prepare the entangled initial state (called a “source state”) that would be required in other measurement-based quantum computers. A measurement-based quantum computer is also universal and can solve any problem that can be solved with a gate-based quantum computer.

Within this fifth milestone, Microsoft will also be implementing their Floquet-based error correction code, specifically the Honeycomb code, which is compatible with the measurement-based quantum computing approach we just described. Floquet codes only require nearest-neighbor connectivity, and at this stage Microsoft will be able to demonstrate gates between logical qubits. They will also be able to generate T-gates using Magic State Distillation. Additional information about the use of Microsoft’s Floquet codes is available in a blog article here as well as technical papers here and here.

GQI notes that Microsoft has joined the growing band of players who are seeking approaches to FTQC beyond the standard surface code model, and in particular codes tailored to their specific hardware. Based on novel ‘dynamic’ logical qubits, Floquet codes certainly seem suited to MBQC using Majoranas. Conversely, they don’t immediately offer the same benefits claimed by proponents of high-connectivity LDPC codes. This is a hot area of innovation.

The last stage in Microsoft’s roadmap, which they call Quantum Supercomputer, would involve scaling this technology up to achieve more qubits and even lower logical error rates. Microsoft’s goal for this would be to achieve a reliable quantum computer with more than 100 logical qubits and an error rate of 10⁻¹² or better. At this level, they believe that performing computations like the Hubbard model used for simulating correlated materials becomes possible.

Viewing existing measures such as quantum volume or logical qubit count as insufficient, Microsoft has defined a new performance metric called rQOPS, which is calculated as rQOPS = Q × f and quoted at a corresponding logical error rate p_L. In this formula Q is the number of logical qubits and f is the logical clock speed. Microsoft believes that this metric encompasses the three key factors for a quantum computer: scale, speed, and reliability. Their initial goal is to achieve an rQOPS measure of 1 million.

Microsoft’s critique of existing metrics notwithstanding, this target corresponds to a machine that provides 100 logical qubits at a logical cycle time of 100 microseconds (10 kHz) and a logical error rate of 10⁻¹² or better. That is comparable to the machines envisaged in other players’ roadmaps for ‘Early FTQC’. This is not the ‘Goliath FTQC’ required to break current cryptography, or the ‘Turbo FTQC’ required to unlock benefits from more marginal quantum algorithms, but it does look like a realistic plan for a very interesting device.
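The arithmetic behind the 1 million rQOPS figure can be checked with a short Python sketch. Only the formula rQOPS = Q × f and the example values (100 logical qubits, 10 kHz logical clock) come from the article; the function names and the 30-day run length are illustrative assumptions.

```python
def rqops(logical_qubits: int, logical_clock_hz: float) -> float:
    """Reliable quantum operations per second: rQOPS = Q * f."""
    return logical_qubits * logical_clock_hz


def operations_during(rqops_value: float, duration_s: float) -> float:
    """Total logical operations executed over a run of the given length."""
    return rqops_value * duration_s


if __name__ == "__main__":
    # 100 logical qubits with a 100-microsecond logical cycle (10 kHz).
    target = rqops(logical_qubits=100, logical_clock_hz=10_000)
    print(f"rQOPS: {target:,.0f}")

    # Assumed 30-day run, matching the "month or more" mentioned earlier.
    thirty_days_s = 30 * 24 * 3600
    total_ops = operations_during(target, thirty_days_s)
    print(f"Operations in 30 days: {total_ops:.3e}")
```

At 1 million rQOPS, an assumed 30-day run executes on the order of 10¹² logical operations, which is the same order of magnitude as the inverse of the 10⁻¹² logical error rate target. That may help explain why the two figures appear together in the roadmap.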

Another key technology that Microsoft is developing is targeted at simplifying control logic implementation. They are developing a cryo-CMOS control chip that will provide the necessary pulses to control the qubits. This chip will be low power so that it does not generate excessive heat inside the dilution refrigerator. To reduce the number of readout lines, Microsoft also plans to multiplex them at a ratio of 1000:1.
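To get a feel for what a 1000:1 multiplexing ratio means at scale, the Python sketch below counts the physical readout lines needed for a given number of readout channels. It assumes one readout channel per physical qubit, which is a simplification made here for illustration and not a detail Microsoft has stated; the function name is likewise hypothetical.

```python
def readout_lines_needed(readout_channels: int, multiplex_ratio: int = 1000) -> int:
    """Physical lines required when `multiplex_ratio` channels share one line."""
    if readout_channels < 0 or multiplex_ratio <= 0:
        raise ValueError("channels must be >= 0 and ratio must be > 0")
    # Ceiling division using integers only, so a partly filled line still counts.
    return -(-readout_channels // multiplex_ratio)


if __name__ == "__main__":
    # Assumption: one readout channel per physical qubit.
    for qubits in (1_000, 100_000, 250_500):
        lines = readout_lines_needed(qubits)
        print(f"{qubits:,} channels -> {lines} readout lines")
```

Under that assumption, a device with 100,000 physical qubits would need 100 readout lines into the refrigerator rather than 100,000.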

GQI views a realistic scalable roadmap for control plane and control logic scaling as a key challenge for many FTQC roadmaps. Microsoft’s ability to implement here will be a key point to watch.

It is clear that Microsoft has a definite plan and roadmap to achieve a powerful quantum computer. It helps to have definitive goals on what will be needed for this and the company is leveraging their software skills and their Quantum Resource Estimator to allow them to set appropriate targets. But, in the end, a lot will depend upon execution and getting the details correct in order to ensure the roadmap is maintained on a timely basis.

For more information about Microsoft’s quantum technology and roadmap, we would recommend a blog posted on the Microsoft Azure Quantum web page here. In particular, it includes two videos that are quite enlightening. The first is a short high-level overview of their Majorana technology. The second is a detailed presentation from Dr. Nayak that describes their research on Majoranas to show that the phenomenon is real and can be utilized to develop reliable qubits.

September 21, 2023